High Cardinality Blog Series - Part 3

By

Harold Lim

Published on

Aug 6, 2024

Table of Contents

In our last blog posts on high cardinality data in observability, we discussed the fundamentals of observability and its growing importance. Now, we'll delve deeper into understanding how high cardinality manifests across all four types of observability data streams: metrics, events, logs, and traces.

In this blog, we will focus only on metrics as the cardinality behaves differently in metrics vs. other types of observability data. In the next blog, we will cover events, logs, and traces.

Metrics are often numeric values, such as counts, averages, or percentages, computed over a set timeline to present overall performance or behavior of a system. For example, a metric could measure the number of page views per minute or the average response time of a server.

Metrics play a critical role in detecting anomalies, outliers, and performance trends of a system over time. In contrast, events, logs, and traces capture discrete system occurrences such as errors, startup/shutdown events, and system state changes, emphasizing qualitative details vs. continuous numerical measurements across fixed time intervals typical of metrics. As a result, high cardinality can be more pronounced in metrics and requires more careful management compared to events, logs, and traces.

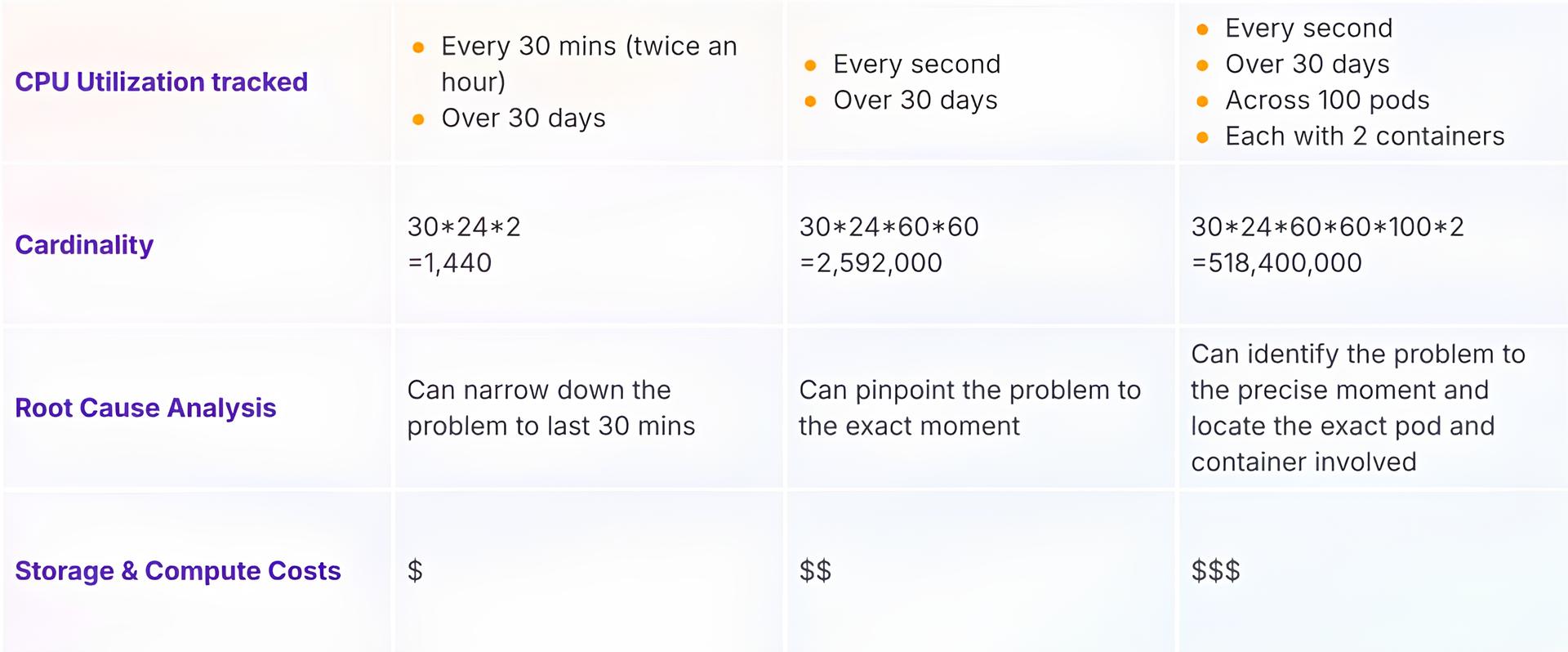

In metrics monitoring, the frequency of data capture intervals and the inclusion of various attributes or dimensions associated with the metric elevates its cardinality. For instance, monitoring CPU usage per second and correlating it with pod or container IDs significantly increases data complexity and cardinality. Tracking these details every second, rather than less frequent intervals like every hour, further increases the volume.

Table: Understanding High Cardinality in Metrics: Balancing Granularity and Costs

While high cardinality data has the detailed granularity and richness to provide accuracy and precision in pinpointing exact system issues, it can also lead to challenges such as inflated storage requirements and higher computational overheads during data aggregation and analysis.

Balancing data richness with resource efficiency is crucial. In future posts, we’ll discuss strategies for achieving this balance. Meanwhile, here are some common pitfalls to avoid to prevent unnecessary cardinality accumulation:

Improper Instrumentation

Improper instrumentation occurs when tags or attributes that are not used in dashboards or alerts are included in metrics. For example, adding an IP address to every request, irrelevant for monitoring purposes, can increase cardinality unnecessarily.

Histogram Buckets

Histograms are used to track the distribution of values, such as response latencies, within different buckets or bins. Using too fine-grained histogram buckets can lead to high cardinality, especially when tracking percentiles for metrics like response latencies. Therefore, it's crucial to design histogram buckets based on the expected value range and required precision, as adding unnecessary buckets will only increase cardinality.

Transient Environment Tags

In dynamic environments like Kubernetes, monitoring metrics often include tags related to infrastructure or environment variables. However, poor choice of tags like container IDs or pod IDs can significantly contribute to high cardinality, particularly in environments with short-lived containers or pods that are frequently created or destroyed. This issue is particularly pronounced in dev/test environments where CI/CD workflows continuously generate new ephemeral resources to test various scenarios. To address these challenges effectively, it is advisable to utilize stable identifiers such as job names or service names for tracking purposes, rather than transient tags like container IDs that can rapidly become obsolete.

By addressing these sources of high cardinality through proper instrumentation, appropriate histogram buckets, and the use of relevant infrastructure and environment tags, organizations can effectively manage cardinality and gain accurate analysis and insights without unnecessary complexity.

Stay tuned for our next blog, where we will dive into managing high cardinality across events, logs, and traces.